Generative AI requires massive amounts of power and water, and the aging U.S. grid can’t handle the load

Thanks to the artificial intelligence boom, new data centers are springing up as quickly as companies can build them. This has translated into huge demand for power to run and cool the servers inside. Now concerns are mounting about whether the U.S. can generate enough electricity for the widespread adoption of AI, and whether our aging grid will be able to handle the load.

“If we don’t start thinking about this power problem differently now, we’re never going to see this dream we have,” said Dipti Vachani, head of automotive at Arm. The chip company’s low-power processors have become increasingly popular with hyperscalers like Google, Microsoft, Oracle and Amazon, precisely because they can reduce power use by up to 15% in data centers.

Nvidia

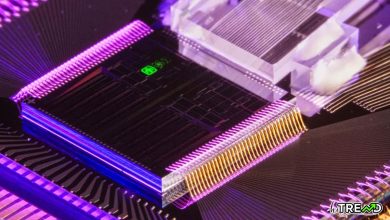

’s latest AI chip, Grace Blackwell, incorporates Arm-based CPUs it says can run generative AI models on 25 times less power than the previous generation.

“Saving every last bit of power is going to be a fundamentally different design than when you’re trying to maximize the performance,” Vachani said.

This strategy of reducing power use by improving compute efficiency, often referred to as “more work per watt,” is one answer to the AI energy crisis. But it’s not nearly enough.

One ChatGPT query uses nearly 10 times as much energy as a typical Google search, according to a report by Goldman Sachs. Generating an AI image can use as much energy as charging your smartphone.

This problem isn’t new. Estimates in 2019 found that training one large language model produced as much CO2 as five gas-powered cars emit over their entire lifetimes.

The hyperscalers building data centers to accommodate this massive power draw are also seeing emissions soar. Google’s latest environmental report showed greenhouse gas emissions rose nearly 50% from 2019 to 2023 in part because of data center energy consumption, although it also said its data centers are 1.8 times as energy efficient as a typical data center. Microsoft’s emissions rose nearly 30% from 2020 to 2024, also due in part to data centers.

And in Kansas City, where Meta is building an AI-focused data center, power needs are so high that plans to close a coal-fired power plant are being put on hold.