Cerebras challenges Nvidia with new AI inference approach

In brief: The AI inference market is expanding rapidly, with OpenAI projected to earn $3.4 billion in revenue this year from its ChatGPT predictions. This growth presents opportunities for new entrants like Cerebras Systems to capture some of this market share. The company’s innovative approach could lead to faster, more efficient AI applications, provided its performance claims are validated through independent testing and real-world applications.


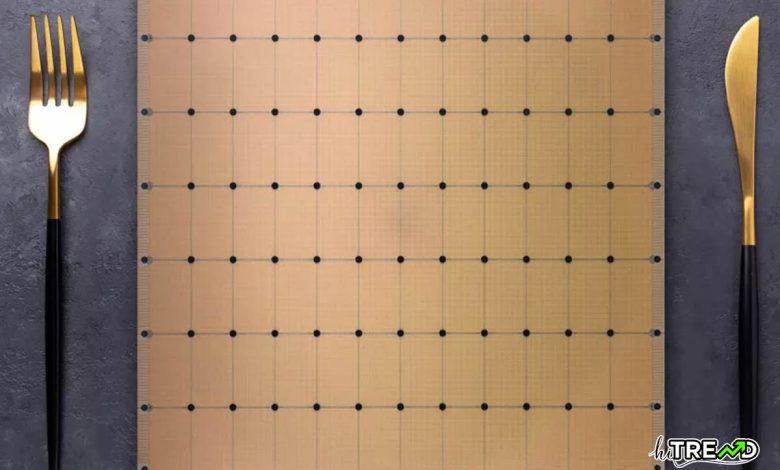

Cerebras Systems, traditionally focused on selling AI computers for training neural networks, is pivoting to offer inference services. The company is using its wafer-scale engine (WSE), a computer chip the size of a dinner plate, to integrate Meta’s open-source LLaMA 3.1 AI model directly onto the chip – a configuration that significantly reduces costs and power consumption while dramatically increasing processing speeds.

Cerebras’ WSE is unique in that it integrates up to 900,000 cores on a single wafer, eliminating the need for external wiring between separate chips. Each core functions as a self-contained unit, combining both computation and memory. This design allows the model weights to be distributed across the cores, enabling faster data access and processing.
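To give a rough sense of scale, the back-of-the-envelope sketch below splits the weights of the 8-billion-parameter LLaMA 3.1 variant evenly across 900,000 cores. The 16-bit weight size and the perfectly even split are assumptions made for illustration; the article does not describe how Cerebras actually places weights on the wafer.

```python
# Figures from the article.
NUM_CORES = 900_000             # "up to 900,000 cores" on one wafer
NUM_PARAMETERS = 8_000_000_000  # 8-billion-parameter LLaMA 3.1

# Assumption (not from the article): each weight is stored in 16 bits.
BYTES_PER_PARAMETER = 2

# Assumption: weights are divided evenly, with no replication or padding.
total_bytes = NUM_PARAMETERS * BYTES_PER_PARAMETER
params_per_core = NUM_PARAMETERS / NUM_CORES
bytes_per_core = total_bytes / NUM_CORES

print(f"Total weight footprint: {total_bytes / 1e9:.0f} GB")
print(f"Parameters per core:    {params_per_core:,.0f}")
print(f"Bytes per core:         {bytes_per_core / 1e3:.1f} kB")
```

Under these assumptions each core would hold on the order of a few thousand parameters, which is the kind of locality Hock's quote describes.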

“We actually load the model weights onto the wafer, so it’s right there, next to the core, which facilitates rapid data processing without the need for data to travel over slower interfaces,” Andy Hock, Cerebras’ Senior Vice President of Product and Strategy, told Forbes.

The performance of Cerebras’ chip is “10 times faster than anything else on the market” for AI inference tasks, with the ability to process 1,800 tokens per second for the 8-billion parameter version of LLaMA 3.1, compared to 260 tokens per second on state-of-the-art GPUs, Andrew Feldman, co-founder and CEO of Cerebras, said in a presentation to the press. This level of performance has been validated by Artificial Analysis, Inc.
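As a quick check on what these throughput numbers mean in practice, the sketch below computes the ratio of the two quoted rates and the time each would take to generate a response. The 1,000-token response length is a hypothetical value chosen for illustration, and the calculation assumes a steady decode rate with no network or prompt-processing overhead.

```python
# Throughput figures quoted in the article (tokens per second, LLaMA 3.1 8B).
CEREBRAS_TOKENS_PER_SEC = 1_800
GPU_TOKENS_PER_SEC = 260

# Hypothetical response length, chosen only for illustration.
RESPONSE_TOKENS = 1_000


def generation_seconds(tokens: int, tokens_per_sec: float) -> float:
    """Time to emit `tokens` at a steady rate, ignoring all other latency."""
    return tokens / tokens_per_sec


speedup = CEREBRAS_TOKENS_PER_SEC / GPU_TOKENS_PER_SEC
cerebras_time = generation_seconds(RESPONSE_TOKENS, CEREBRAS_TOKENS_PER_SEC)
gpu_time = generation_seconds(RESPONSE_TOKENS, GPU_TOKENS_PER_SEC)

print(f"Speedup from quoted figures: {speedup:.1f}x")
print(f"{RESPONSE_TOKENS} tokens on Cerebras: {cerebras_time:.2f} s")
print(f"{RESPONSE_TOKENS} tokens on GPU:      {gpu_time:.2f} s")
```

Taken by themselves, the two quoted rates give a ratio of roughly 6.9x rather than 10x, so the "10 times faster" figure presumably rests on a comparison not detailed here.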

Beyond speed, Feldman and chief technologist Sean Lie suggested that faster processing enables more complex and accurate inference tasks. This includes multiple-query tasks and real-time voice responses. Feldman explained that speed allows for “chain-of-thought prompting,” leading to more accurate results by encouraging the model to show its work and refine its answers.

In natural language processing, for example, models could generate more accurate and coherent responses, enhancing automated customer service systems by providing more contextually aware interactions. In healthcare, AI models could process larger datasets more quickly, leading to faster diagnoses and personalized treatment plans. In the business sector, real-time analytics and decision-making could be significantly improved, allowing companies to respond to market changes with greater agility and precision.

Despite its promising technology, Cerebras faces challenges in a market dominated by established players like Nvidia. Nvidia’s dominance is partly due to CUDA, a widely used parallel computing platform that has created a strong ecosystem around its GPUs. Cerebras’ WSE requires software adaptation, as it is fundamentally different from traditional GPUs. To ease this transition, Cerebras supports high-level frameworks like PyTorch and offers its own software development kit.

Cerebras is also launching its inference service through an API to its own cloud, making it accessible to organizations without requiring an overhaul of existing infrastructure. The company plans to expand its offerings by soon providing the larger 405-billion-parameter LLaMA model on its WSE, followed by models from Mistral and Cohere. This expansion could further solidify its position in the AI market.

However, Jack Gold, an analyst with J.Gold Associates, cautions that “it’s premature to estimate just how superior it will be” until more concrete real-world benchmarks and operations at scale are available.
